How to recover lost Kafka messages and reprocess them safely

Problem

A production Kafka consumer had a subtle bug that caused it to commit offsets without actually processing the messages. As a result, the consumer group’s committed offsets moved past those messages. From Kafka’s point of view they had been consumed, even though the application never handled them.

This meant:

- The consumer group “caught up” in Kafka’s eyes,

- But the downstream systems never saw those events.

Is there a way to reprocess those messages that weren’t processed?

Considerations

Before jumping into a fix, it’s worth stepping back and asking a few key questions to understand the scope and risk of reprocessing. These considerations will help you decide whether you need a full replay, a targeted recovery, or a controlled offset reset.

Primary Considerations

- Is your Kafka consumer idempotent? Can it safely reprocess the same message more than once without unintended side effects?

- Is message ordering important? Could uncontrolled reprocessing violate sequencing guarantees, requiring a more cautious replay strategy?

- Are your downstream systems tolerant to duplicates? Could replaying messages cause duplicate payments, orders, or other side effects in external systems?

Secondary Considerations

- What’s your retention window? Ensure the unprocessed messages still exist in the Kafka topic by checking the topic’s retention settings (`retention.ms` and `retention.bytes`). If they have already been deleted, you’ll need to recover from another data source.

- How will you validate after recovery? Plan how to confirm data consistency post-reprocessing—via counts, checksums, or business-level verification.

- When did the issue start, and how consistent was it? If the bug began three hours ago, was it intermittent—dropping only some messages—or did it fail to process all messages during that period?

- Do you have visibility into what was processed? Use logs, metrics, or audit trails to confirm which messages were successfully handled. This helps narrow the replay window.
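Suppose the application writes an audit record for every message it actually handles. A small reconciliation script can then turn that trail into concrete replay ranges. The sketch below is a minimal, hypothetical example, and `find_unprocessed` and its input shapes are illustrative names. It makes two assumptions:

- The audit trail records `(partition, offset)` pairs.
- Every offset in the window holds a delivered record. This is not true for compacted topics, or for topics with transactional control markers, where some offsets are never delivered to consumers.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def find_unprocessed(
    window: Dict[int, Tuple[int, int]],
    processed: Iterable[Tuple[int, int]],
) -> Dict[int, List[Tuple[int, int]]]:
    """Return, per partition, inclusive (first, last) offset ranges with no audit record.

    window: {partition: (start_offset, end_offset_exclusive)} for the suspected incident,
            e.g. from the first suspect offset up to the group's current committed offset.
    processed: (partition, offset) pairs taken from the application's audit trail.
    """
    seen = defaultdict(set)
    for partition, offset in processed:
        seen[partition].add(offset)

    gaps: Dict[int, List[Tuple[int, int]]] = {}
    for partition, (start, end) in window.items():
        ranges = []
        run_start = None  # first offset of the current run of unprocessed offsets
        for offset in range(start, end):
            if offset not in seen[partition]:
                if run_start is None:
                    run_start = offset
            elif run_start is not None:
                ranges.append((run_start, offset - 1))
                run_start = None
        if run_start is not None:  # window ended inside a run of unprocessed offsets
            ranges.append((run_start, end - 1))
        if ranges:
            gaps[partition] = ranges
    return gaps
```

A result of many small ranges suggests an intermittent failure. A single range covering the whole window suggests every message was dropped. In both cases, the first offset of each partition’s first range is the natural starting point for an ordered, per-partition replay.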

Scenario 1 – Safe Full Replay for Idempotent Consumers (Event order doesn’t matter)

Problem characteristics:

- The Kafka consumer and the downstream components are idempotent.

- Reprocessing messages—even those already successfully processed—will not create duplicates or undesired side effects.

- Ordering requirements are not strict.

Recommended steps:

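- Pause or isolate the consumer by stopping every instance in the consumer group before resetting offsets. `kafka-consumer-groups.sh` can only reset offsets for a group with no active members, and a running instance would keep committing its own offsets.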

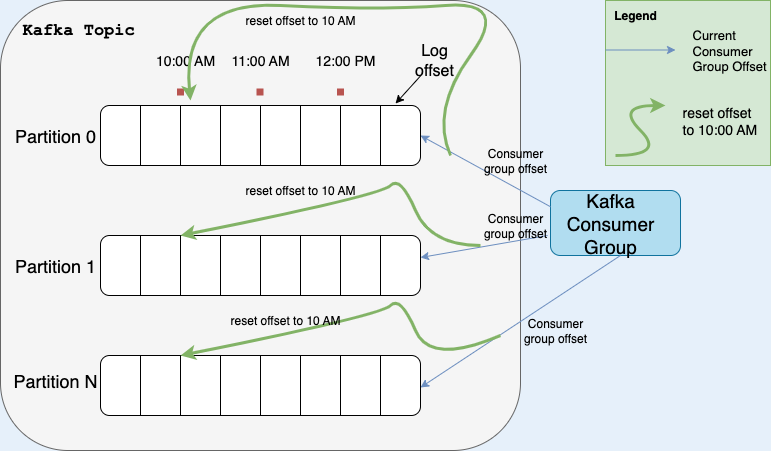

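- Reset offsets to the start of the affected period using `kafka-consumer-groups.sh`. Note: the `--to-datetime` value needs to be in UTC. Running the command without `--execute` (or with `--dry-run`) only prints the offsets it would set, which is a cheap way to check the timestamp first.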

```shell
kafka-consumer-groups.sh \
  --bootstrap-server localhost:9092 \
  --group my-consumer-group \
  --topic orders-topic \
  --reset-offsets \
  --to-datetime 2025-10-12T10:00:00.000 \
  --execute
```

- Restart the consumer and let it process all messages from the affected period.

- Validate downstream systems to ensure all messages have been correctly handled without duplicates or errors.
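`--to-datetime` expects a UTC timestamp, so it is easy to reset to the wrong hour when the incident time is known in local time. The following sketch uses two hypothetical helpers:

- `to_kafka_datetime` converts a time-zone-aware datetime into the `YYYY-MM-DDTHH:mm:SS.sss` form used above.
- `reset_command` builds the matching command. It defaults to `--dry-run` so the planned offsets can be reviewed before anything changes.

```python
from datetime import datetime, timedelta, timezone
from typing import List


def to_kafka_datetime(dt: datetime) -> str:
    """Format an aware datetime as a UTC 'YYYY-MM-DDTHH:mm:SS.sss' string for --to-datetime."""
    if dt.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime so the UTC conversion is unambiguous")
    utc = dt.astimezone(timezone.utc)
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}"


def reset_command(bootstrap: str, group: str, topic: str, start: datetime,
                  execute: bool = False) -> List[str]:
    """Build the kafka-consumer-groups.sh arguments; without execute=True it is a dry run."""
    return [
        "kafka-consumer-groups.sh",
        "--bootstrap-server", bootstrap,
        "--group", group,
        "--topic", topic,
        "--reset-offsets",
        "--to-datetime", to_kafka_datetime(start),
        "--execute" if execute else "--dry-run",
    ]


# Example: the incident started at 12:00 local time in a UTC+2 zone.
incident_start = datetime(2025, 10, 12, 12, 0, tzinfo=timezone(timedelta(hours=2)))
print(" ".join(reset_command("localhost:9092", "my-consumer-group", "orders-topic", incident_start)))
```

Once the dry-run output looks right, you would apply the reset by rebuilding the list with `execute=True` and passing it to `subprocess.run(cmd, check=True)`. The consumer group still has to be stopped first.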

Scenario 2 – Selective Replay for Non-Idempotent Systems (Event order doesn’t matter)

Problem characteristics:

- The Kafka consumer and the downstream components are not idempotent.

- Reprocessing messages that may already have been successfully processed will likely create duplicates or undesired side effects.

- Ordering requirements are not strict.

- A consumer group’s committed offset is a single position per partition, and a reset replays everything after that position. You therefore cannot reset offsets to skip the successfully processed messages and replay only the failed ones.

Recommended steps:

- Create a new Kafka topic to hold messages that need to be replayed.

- Run a temporary consumer (or script) that reads messages from the affected offsets in the original topic.

- Filter out messages that were already successfully processed (using logs, IDs, or other metadata).

- Produce only the failed/unprocessed messages into the new replay topic.

- Start your original consumer on the new replay topic to process only the unprocessed messages.

- Validate that all previously unprocessed messages are handled correctly without creating duplicates or side effects.
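The filtering and republishing steps can be kept independent of any particular Kafka client. In this sketch, `Record`, `message_id`, and `replay_unprocessed` are hypothetical names. It assumes two things:

- Each message carries a unique business ID.
- The set of already-processed IDs has been collected from logs or a downstream database.

In a real script, `records` would come from a consumer assigned to the affected partitions and offsets (for example with confluent-kafka’s `Consumer.assign()`). `produce` would wrap a producer call such as `Producer.produce()` that targets the replay topic.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Set


@dataclass(frozen=True)
class Record:
    """Hypothetical view of a message read from the original topic."""
    partition: int
    offset: int
    key: Optional[bytes]
    value: bytes
    message_id: str  # assumed unique business ID carried in the payload or a header


def replay_unprocessed(
    records: Iterable[Record],
    processed_ids: Set[str],
    produce: Callable[[Optional[bytes], bytes], None],
) -> int:
    """Forward only records whose ID is not in processed_ids; return how many were forwarded."""
    forwarded = 0
    for record in records:
        if record.message_id in processed_ids:
            continue  # already handled downstream; replaying it would repeat its side effects
        produce(record.key, record.value)  # keep the original key so per-key grouping survives
        forwarded += 1
    return forwarded
```

Forwarding the original key keeps messages with the same key on the same partition of the replay topic. The temporary reader should also use its own `group.id` (or not commit offsets at all), so that it never moves the original group’s committed offsets.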

Scenario 3 – Replay for Idempotent Consumers requiring event order

Problem characteristics:

- The Kafka consumer and the downstream components are idempotent.

- Your consumer or downstream systems depend on message ordering within a partition (e.g., balance updates or order status changes).

- Reprocessing out of order could cause invalid state transitions or inconsistent data.

- Idempotency alone does not protect against sequence violations — processing order still matters.
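A small, hypothetical example shows why idempotency alone is not enough. `apply_status` simply overwrites an order’s status, so applying the same event twice is harmless. Applying the same events in a different order, however, leaves a different final state. The order ID and status values are illustrative.

```python
def apply_status(store: dict, order_id: str, status: str) -> None:
    """Idempotent write: applying the same event again leaves the same state."""
    store[order_id] = status


events = [("order-1", "CREATED"), ("order-1", "PAID"), ("order-1", "SHIPPED")]

# Replaying the whole sequence twice, in order, is harmless.
in_order: dict = {}
for _ in range(2):
    for order_id, status in events:
        apply_status(in_order, order_id, status)

# Replaying the same events in a different order is not.
reordered: dict = {}
for order_id, status in [events[2], events[0], events[1]]:
    apply_status(reordered, order_id, status)

print(in_order)   # {'order-1': 'SHIPPED'}
print(reordered)  # {'order-1': 'PAID'}
```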

Recommended steps:

- Identify the affected partitions and, for each one, the earliest offset that was not processed (the point where the bug started dropping messages in that partition).

- Reset offsets only for the affected partitions to the chosen point using Kafka offset reset tools or APIs.

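```shell
# Partitions are selected with the TOPIC:PARTITION syntax; --to-offset applies one
# offset to every listed partition, so run once per partition if start offsets differ.
kafka-consumer-groups.sh \
  --bootstrap-server <BROKER> \
  --group <CONSUMER_GROUP> \
  --topic <TOPIC>:<PARTITION_NUMBER> \
  --reset-offsets \
  --to-offset <OFFSET> \
  --execute
```

- Reprocess messages in strict partition order to preserve event sequencing. A Kafka consumer already receives each partition’s records in offset order, so the main risk is in the application itself, for example handing records from the same partition to parallel workers.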

- Validate downstream state to ensure ordering consistency post-recovery.
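One way to check ordering after the replay is to record, for each downstream write, the partition, key, and offset it came from. You can then look for keys whose offsets went backwards. The sketch assumes such an apply log exists and covers only the replay run; the log format and `find_order_violations` are hypothetical. A log that also covered the original run would legitimately show offsets jumping back at the reset point.

```python
from typing import Hashable, Iterable, List, Tuple


def find_order_violations(
    applied: Iterable[Tuple[int, Hashable, int]],
) -> List[Tuple[int, Hashable, int, int]]:
    """Return (partition, key, highest_offset_so_far, offset) wherever a key's offsets went backwards.

    applied: (partition, key, offset) tuples in the order the replay applied them downstream.
    Repeating the same offset is allowed, since an idempotent retry may apply a record twice.
    """
    highest = {}
    violations = []
    for partition, key, offset in applied:
        previous = highest.get((partition, key))
        if previous is not None and offset < previous:
            violations.append((partition, key, previous, offset))
        elif previous is None or offset > previous:
            highest[(partition, key)] = offset
    return violations
```

An empty result means every key was applied in non-decreasing offset order during the replay.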

Comparison table

| Scenario | When it Applies | Replay Approach | Requirements | Considerations |
|---|---|---|---|---|
| Scenario 1 – Safe Full Replay for Idempotent Consumers (Order Doesn’t Matter) | All messages in the affected period can be safely replayed, including successfully processed messages | Replay all messages in all partitions from the affected period | Consumer and downstream systems must be idempotent | Minimal risk; ordering doesn’t matter; simple full replay works |
| Scenario 2 – Selective Replay for Non-Idempotent Systems (Order Doesn’t Matter) | Cannot safely replay all messages; reprocessing previously successful messages could cause problems | Replay only failed/unprocessed messages (using a temporary topic, filtering, or logs) | Must filter out successfully processed messages; consumer may not be idempotent | Risk of duplicates if filtering is incorrect; requires identifying failed messages accurately |
| Scenario 3 – Replay for Idempotent Consumers requiring event order | Ordering within partitions is critical; may include partially processed messages | Replay affected partitions in order from the earliest safe offset | Consumer and downstream systems must be idempotent; partition order must be preserved | Out-of-order processing can corrupt state; careful offset selection required; more operational overhead |
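The table and the retention question from the considerations can be condensed into a small decision helper. This is an illustrative summary rather than a rule: `choose_strategy` and the `Strategy` names are hypothetical, and a real incident may call for judgment beyond three booleans.

```python
from enum import Enum


class Strategy(Enum):
    FULL_REPLAY = "Scenario 1: reset offsets to a timestamp and replay everything"
    SELECTIVE_REPLAY = "Scenario 2: copy only unprocessed messages to a replay topic"
    ORDERED_PARTITION_REPLAY = "Scenario 3: reset affected partitions and replay in order"
    HYBRID = "Non-idempotent and order-sensitive: combine deduplication with ordered replay"
    RECOVER_ELSEWHERE = "Messages are past retention: recover from another data source"


def choose_strategy(messages_retained: bool, idempotent: bool, order_matters: bool) -> Strategy:
    """Map the primary considerations onto the scenarios (illustrative only).

    idempotent: the consumer and all downstream systems tolerate reprocessing.
    """
    if not messages_retained:
        return Strategy.RECOVER_ELSEWHERE
    if idempotent:
        return Strategy.ORDERED_PARTITION_REPLAY if order_matters else Strategy.FULL_REPLAY
    return Strategy.HYBRID if order_matters else Strategy.SELECTIVE_REPLAY
```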

Additional Scenarios

The scenarios we’ve covered—full replay, targeted replay, and replay while preserving event order—address the most common recovery strategies. However, some real-world situations can be more complex. For example, if a system is non-idempotent and also requires strict event ordering, recovery becomes significantly harder. In such cases, you may need to combine deduplication mechanisms, state tracking, or even manual partition-level recovery to ensure both correctness and sequence integrity. Ideally, redesigning your system toward idempotent, order-aware processing will simplify future reprocessing.
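A common deduplication mechanism is to record the ID of every message whose side effect has been applied, and to skip IDs that have been seen before. The sketch below assumes each message carries a unique ID. `DeduplicatingHandler` is a hypothetical name, and the in-memory set stands in for a durable store.

```python
from typing import Callable, Set


class DeduplicatingHandler:
    """Wraps a non-idempotent handler so each message ID triggers its side effect at most once."""

    def __init__(self, handler: Callable[[str, bytes], None]) -> None:
        self._handler = handler
        self._processed: Set[str] = set()  # stand-in for a durable processed-ID store

    def handle(self, message_id: str, payload: bytes) -> bool:
        """Run the handler unless message_id was already processed; return True if it ran."""
        if message_id in self._processed:
            return False  # duplicate delivered by the replay: skip the side effect
        self._handler(message_id, payload)
        self._processed.add(message_id)  # record only after the side effect succeeded
        return True
```

In production, the processed-ID record needs to survive restarts. It should ideally be written atomically with the side effect (for example, in the same database transaction); otherwise a crash between the two can still produce a duplicate.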

Additional Considerations

- Complex systems may require hybrid strategies. If your consumer is non-idempotent and ordering matters, you may need a combination of targeted replay, partition-level recovery, and deduplication logic.

- Operational coordination is critical. Recovery in these scenarios often involves careful coordination between producers, consumers, and downstream systems to avoid duplicates or out-of-order processing.

- Redesign for future resilience. Whenever possible, aim for idempotent, order-aware processing to reduce complexity and simplify future recovery efforts.
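One order-aware pattern attaches a per-key sequence number to each event. The consumer applies an event only if it is newer than the last one it applied for that key, so duplicates and stale events become no-ops during a replay. In this sketch, the `sequence` field and `VersionedStore` are assumptions, not part of the original system.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class VersionedStore:
    """Latest state per key; events that are not newer than the stored version are ignored."""
    state: Dict[str, str] = field(default_factory=dict)
    versions: Dict[str, int] = field(default_factory=dict)

    def apply(self, key: str, sequence: int, value: str) -> bool:
        current = self.versions.get(key)
        if current is not None and sequence <= current:
            return False  # duplicate or stale event: safe to skip during a replay
        self.state[key] = value
        self.versions[key] = sequence
        return True
```

Skipping stale events is only correct when each event carries the full new state, as an order status does. For delta events such as balance increments, a skipped event would be lost, so those still need a strictly ordered replay.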